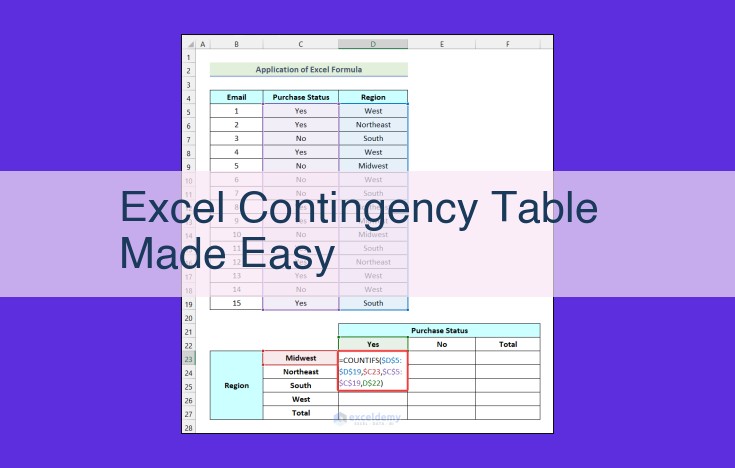

Excel for Contingency Tables Made Easy: This comprehensive guide simplifies the creation and analysis of contingency tables in Excel. It covers everything from understanding the concepts of contingency tables and the chi-square test to calculating degrees of freedom, expected frequencies, and relative frequencies. With step-by-step instructions and practical examples, this resource empowers users to effectively analyze categorical data and draw meaningful insights.

Contingency Tables: A Simplified Guide for Statistical Analysis

When it comes to understanding the relationships between categorical variables, contingency tables come to the rescue. Imagine a table with rows and columns, where each cell represents the count of observations that fall into specific categories of two or more variables. These tables provide a clear and concise way to visualize and analyze data, making them a valuable tool in various research domains.

The purpose of contingency tables is to determine if there is an association or dependence between the variables. For instance, if you have data on gender and income level, you could use a contingency table to see if there’s a connection between these two factors. If you observe a significant association, it suggests that knowing one variable’s value can provide insights into the other variable’s likelihood.

**Understanding the Chi-Square Test: Unveiling Relationships Between Categorical Variables**

In the realm of statistics, relationships between categorical variables often hide in plain sight. Enter the Chi-square test, a statistical superhero that comes to the rescue, helping us uncover these elusive connections.

What is the Chi-Square Test?

The Chi-square test is a non-parametric test that assesses the association between two or more categorical variables. It does this by comparing the observed frequencies of outcomes to the expected frequencies that would occur if the variables were independent.

Delving into the Independence Assumption

The Chi-square test assumes that the categorical variables being examined are independent. This means that the outcome of one variable does not influence the outcome of the other. Imagine a survey asking people about their favorite color and their preferred pet. If the variables were independent, the person’s favorite color would have no bearing on their choice of pet.

Calculating Observed and Expected Frequencies

The observed frequencies are the actual counts of outcomes in each cell of the contingency table. Expected frequencies, on the other hand, are hypothetical counts based on the assumption of independence. If the observed and expected frequencies differ significantly, it suggests that the variables are not independent and there is some form of association between them.

Detecting Patterns and Making Connections

The Chi-square test calculates a test statistic, which quantifies the discrepancy between the observed and expected frequencies. A higher test statistic indicates a stronger association between the variables. By examining the test statistic and its associated p-value, statisticians can determine whether the association is statistically significant.

Unveiling Hidden Relationships

The Chi-square test is a powerful tool for exploring relationships between categorical variables. It can uncover patterns that might otherwise remain hidden, allowing researchers to make meaningful connections and gain deeper insights into their data.

Calculating Degrees of Freedom for Contingency Tables

In the realm of statistical analysis, contingency tables are invaluable tools for exploring associations between categorical variables. One crucial element in the analysis of these tables is understanding the concept of degrees of freedom.

Degrees of freedom represent the number of independent pieces of information available in a dataset. For contingency tables, the degrees of freedom are directly related to the table’s dimensions, measured in terms of rows and columns.

Calculating Degrees of Freedom

The formula for calculating degrees of freedom (df) for a contingency table is as follows:

df = (Number of Rows - 1) × (Number of Columns - 1)

Let’s illustrate this concept with an example. Suppose we have a 2×3 contingency table (two rows and three columns). The degrees of freedom for this table would be calculated as:

df = (2 - 1) × (3 - 1) = 2

Interpretation of Degrees of Freedom

Degrees of freedom play a pivotal role in statistical inference. They help determine the critical values for hypothesis testing and the appropriate probability distribution to use. In the context of contingency tables, degrees of freedom indicate the number of independent comparisons that can be made after adjusting for row and column totals.

Significance of Degrees of Freedom

The number of degrees of freedom in a contingency table has implications for the Chi-square test, which is commonly used to assess the association between categorical variables. A higher number of degrees of freedom generally leads to a stricter significance test, requiring a larger Chi-square statistic to achieve statistical significance.

By understanding the concept of degrees of freedom, researchers can accurately interpret the results of their contingency table analyses and make informed conclusions about the associations between the variables under investigation.

Determining Expected Frequencies in Contingency Tables

In the realm of statistics, contingency tables play a crucial role in analyzing the relationships between categorical variables. When examining these tables, a key step is calculating the expected frequencies. These values provide a baseline for comparison with the observed frequencies, ultimately aiding in determining the strength and direction of any associations.

The Concept of Independence

The foundation of expected frequency calculation lies in the assumption of independence. This means that the occurrence of one event does not influence the likelihood of another. In the context of contingency tables, this assumption implies that the rows and columns are independent of each other.

Calculating Expected Frequencies

With the assumption of independence in place, the expected frequency for each cell in the table can be calculated using the following formula:

Expected frequency = (Row Total × Column Total) / Grand Total

To illustrate, consider a contingency table analyzing the relationship between gender and smoking status. The following table shows the observed frequencies:

| Gender | Smoker | Non-Smoker | Total |

|---|---|---|---|

| Male | 50 | 100 | 150 |

| Female | 25 | 75 | 100 |

| Total | 75 | 175 | 250 |

To calculate the expected frequency for the cell representing male smokers, we would use the following formula:

Expected frequency = (150 × 75) / 250 = 45

Purpose of Expected Frequencies

Expected frequencies serve as a reference point for comparing observed frequencies. If the observed frequency for a cell is significantly different from the expected frequency, it suggests that the variables in that cell are not independent and that there may be an association between them.

In the example above, if the observed frequency for male smokers is significantly lower than the expected frequency of 45, it would indicate that smoking is less prevalent among males than would be expected by chance. This finding would suggest that there may be factors associated with being male that discourage smoking.

Understanding expected frequencies is essential for conducting thorough and meaningful analysis using contingency tables. They provide a benchmark against which observed frequencies are compared, allowing researchers to draw informed conclusions about the relationships between categorical variables.

Examining Observed Frequencies

Observed frequencies are the actual number of times each combination of categories occurs in a data set. They are different from expected frequencies, which are the number of times each combination would occur if the variables were independent.

The difference between observed and expected frequencies is important because it can tell us whether or not the variables are associated. If the observed frequencies are very different from the expected frequencies, it suggests that the variables are associated. This could be because one variable affects the other, or because they are both influenced by a third variable.

In order to determine whether or not the observed frequencies are significantly different from the expected frequencies, we can use a statistical test such as the chi-square test. The chi-square test compares the observed frequencies to the expected frequencies and calculates a probability value. A probability value below 0.05 indicates that the observed frequencies are significantly different from the expected frequencies, and that the variables are associated.

Calculating Relative Frequencies: Unlocking the Significance of Variable Relationships

In our exploration of contingency tables and assessing relationships between categorical variables, we delve deeper into the world of relative frequencies. Relative frequencies provide invaluable insights into the strength and direction of these relationships.

Understanding Relative Frequency

Relative frequency is a statistical measure that represents the proportion of a particular category relative to the total number of observations within a contingency table. It is often expressed as a percentage or decimal value.

Calculating Relative Frequency

To calculate the relative frequency of a specific category, divide the observed frequency of that category by the total number of observations. The result represents the proportion of cases that fall into that category.

Relative Frequency = Observed Frequency / Total Number of Observations

Significance of Relative Frequency

Relative frequencies are crucial for understanding the significance of variable relationships. By comparing the relative frequencies of different categories, we can assess the extent to which one variable influences the distribution of another. Higher relative frequencies indicate a stronger relationship, while lower relative frequencies suggest a weaker relationship.

Example

Consider a contingency table that examines the relationship between gender and car ownership. The relative frequency of females who own cars can be calculated by dividing the observed number of females who own cars by the total number of females in the sample. A high relative frequency would suggest that gender has a significant impact on car ownership, with females being more likely to own cars.

Relative frequencies provide a powerful tool for assessing the strength and direction of relationships between categorical variables. By calculating relative frequencies, researchers can gain valuable insights into how different variables influence each other’s distribution, unlocking a deeper understanding of data patterns.

Converting to Percentages: Unveiling Data Proportions

In the realm of data analysis, percentages play a pivotal role in painting a clearer picture of our findings. By converting raw frequencies into percentages, we gain a deeper understanding of the relative proportions of data within our contingency tables. Percentages allow us to compare different categories and draw meaningful conclusions about the relationships between them.

To illustrate this concept, let’s consider a contingency table examining the relationship between gender and voting preference in a recent election. The raw frequencies show that 52% of female voters supported Candidate A, while 48% supported Candidate B. Similarly, among male voters, 60% voted for Candidate A, and 40% voted for Candidate B.

By converting these frequencies to percentages, we can more easily see the proportion of voters in each gender who supported each candidate. We find that among female voters, Candidate A received a slightly greater share of the vote (52%) compared to Candidate B (48%). Among male voters, the gap is wider, with Candidate A securing a clear majority of 60%, while Candidate B received only 40%.

These percentages provide valuable insights into the voting patterns of different demographic groups. They allow us to quantify the preferences of each gender and compare them directly. Moreover, by expressing frequencies as percentages, we can easily compare data across different contingency tables, even if they have varying sample sizes.

In summary, converting frequencies to percentages is a crucial step in data analysis. It helps us to understand the relative proportions of data, compare categories, and draw meaningful conclusions from our contingency tables. By using percentages, we can gain a clearer picture of our findings and make informed decisions based on the data we have gathered.